Local Kubernetes Development with kind

kind is a tool for running local Kubernetes clusters using Docker containers as nodes. kind was primarily designed for testing Kubernetes itself, but it is also quite useful for creating a Kubernetes environment for local development, QA, or CI/CD. This blog post shows you how to set up a kind-based environment for local development that can mimic a production Kubernetes environment.

A fully functioning environment using kind includes a few different components. For our purposes, we will install the following software:

- Docker.

- The kubectl tool.

- A local Docker registry.

- kind.

- An Ingress controller.

Together, these steps form a repeatable script you can run to set up a local Kubernetes cluster whenever you need one.
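
The component list above doubles as a checklist for that script. As a minimal sketch, assuming a POSIX-compatible shell, a preflight check can stop early when any required tool is missing. The function name require_tools is hypothetical.

```shell
# Hypothetical preflight helper: report every tool in the argument list that
# is not on PATH. Returns 0 when all tools are found, 1 otherwise.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Calling require_tools docker kubectl kind || exit 1 at the top of a setup script reports all missing tools at once instead of failing on the first command that needs one.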

Docker

The name kind stands for “Kubernetes in Docker”, so you will need to install Docker to get started. Installation is environment-specific, and you may need to consult the Docker documentation if you get stuck. The following should get you started:

macOS

The easiest way to install Docker on macOS is Docker Desktop: download the installer from the Docker Desktop download page, double-click it to install, and you should be ready to go.

Linux (Ubuntu)

Older versions of Docker were called docker, docker.io, or docker-engine. If any of these are installed, start by removing them.

Once that is done, you can add the Docker apt repository so that future installations and upgrades come through apt. To do so, first install the packages apt needs to use a repository over HTTPS.

Then add the Docker apt repository by first adding Docker’s GPG key and then adding the repository itself.

Lastly, refresh the apt package index so it picks up the new Docker source, and install Docker.
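
The four Ubuntu steps above can be sketched as a single function. This is a sketch assuming Ubuntu on amd64 and sudo access; the function name is hypothetical, and it stores Docker’s key under /etc/apt/keyrings with signed-by instead of using the deprecated apt-key command. Check Docker’s official Ubuntu installation guide for the current package list before relying on it.

```shell
# Hypothetical helper following the four Ubuntu steps above.
# Assumes Ubuntu on amd64 and a user with sudo rights.
install_docker_ubuntu() {
  # 1. Remove older Docker packages (ignore errors if they are not installed).
  sudo apt-get remove -y docker docker-engine docker.io containerd runc || true

  # 2. Install the packages apt needs to fetch and verify a repository over HTTPS.
  sudo apt-get update
  sudo apt-get install -y ca-certificates curl gnupg lsb-release

  # 3. Add Docker's GPG key, then the Docker apt repository signed by that key.
  sudo install -m 0755 -d /etc/apt/keyrings
  curl -fsSL https://download.docker.com/linux/ubuntu/gpg \
    | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg
  echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" \
    | sudo tee /etc/apt/sources.list.d/docker.list >/dev/null

  # 4. Refresh the package index with the new source and install Docker.
  sudo apt-get update
  sudo apt-get install -y docker-ce docker-ce-cli containerd.io
}
```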

Windows with WSL2

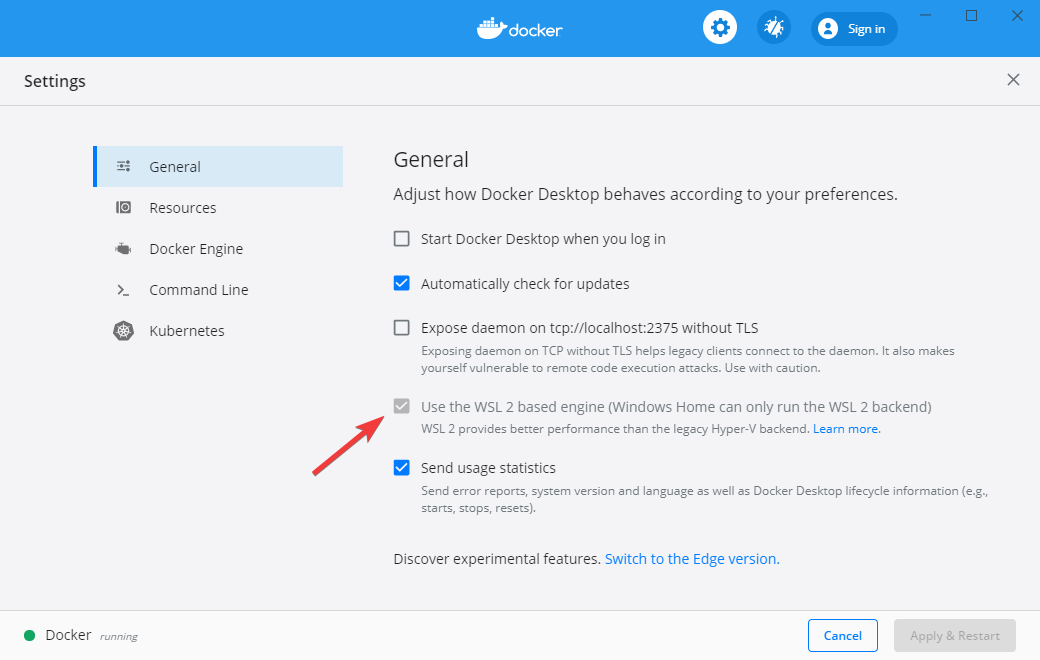

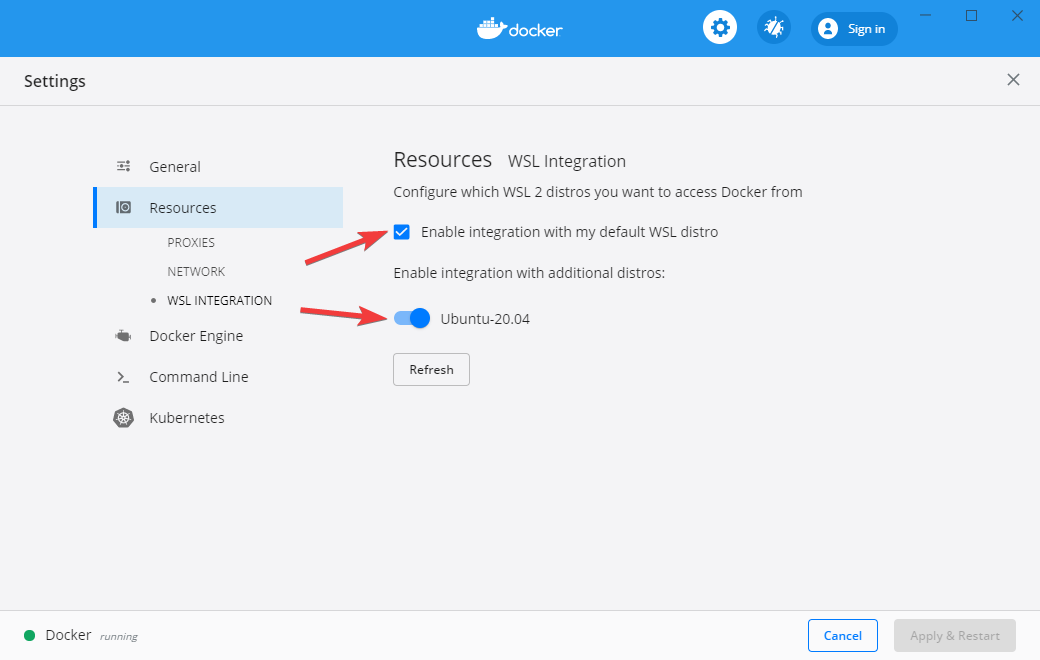

Recent versions of Windows include the Windows Subsystem for Linux 2 (WSL2), which provides excellent integration with Docker. To get started, first install WSL2 by following Microsoft’s WSL installation guide. Once this is done, install Docker Desktop.

After Docker Desktop is installed, open the Docker Dashboard settings and make sure that WSL2 integration is enabled. This involves checking two boxes: one that makes the WSL2-based engine Docker’s backend, and one that enables WSL2 integration for the particular Linux distributions you are using.

kubectl

kind does not strictly require kubectl, but because we are aiming to set up a fully functioning development environment, we will install kubectl so we can perform basic Kubernetes operations on our cluster.

If you get stuck at any point, refer to the official kubectl installation instructions.

macOS

On macOS, kubectl is available through Homebrew and can be installed with brew install kubectl.

Linux (Ubuntu)

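Linux users (and Windows users running WSL2) can install kubectl through apt. Note that the Google-hosted apt repository (apt.kubernetes.io) has been deprecated in favour of the community-owned pkgs.k8s.io repository, so follow the current apt instructions in the official kubectl installation guide.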

kind

Now we can finally install kind. The kind project publishes release binaries on GitHub. You can install kind through Homebrew on macOS, or by downloading the release binary on Linux.

macOS

Install kind with Homebrew: brew install kind.

Linux (Ubuntu)

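Download the release binary for your platform, make it executable, and move it to a directory on your PATH, such as /usr/local/bin.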
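
Installing kind on Linux amounts to fetching one binary. A sketch, assuming kind serves release binaries at kind.sigs.k8s.io/dl/<version>/kind-<os>-<arch> (the pattern its quick-start guide uses); both helper names are hypothetical, and the version is whichever release tag you choose.

```shell
# Hypothetical helper: build the download URL for a kind release binary.
# Defaults to Linux on amd64 when OS and architecture are omitted.
kind_download_url() {
  local version="$1" os="${2:-linux}" arch="${3:-amd64}"
  echo "https://kind.sigs.k8s.io/dl/${version}/kind-${os}-${arch}"
}

# Hypothetical installer: download, mark executable, move onto the PATH.
install_kind_linux() {
  curl -Lo ./kind "$(kind_download_url "$1" linux amd64)"
  chmod +x ./kind
  sudo mv ./kind /usr/local/bin/kind
}
```

For example, install_kind_linux v0.20.0 would fetch kind-linux-amd64 for that release; substitute the tag you actually want from the kind releases page.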

Creating Kubernetes clusters using kind

kind is a tool for running local Kubernetes clusters using Docker containers as Kubernetes nodes. To see how this works, let’s create a cluster with the default settings by running kind create cluster.

By default, this creates a single Kubernetes node running as a Docker container named kind-control-plane, and configures kubectl to use this cluster (through a context named kind-kind). You can see the Docker container running your cluster with docker ps.

You can also inspect it through your newly configured kubectl, for example with kubectl get nodes.

You can delete your cluster at any time with the kind delete cluster command.

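You can also run a specific version of Kubernetes using the --image flag. For example, to create a cluster running Kubernetes 1.14.10 you would use kind create cluster --image kindest/node:v1.14.10. Node images are built for particular kind releases, so check the release notes of your kind version for the images it supports.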

Adding more nodes to your cluster

By default, kind creates a cluster with a single node. You can add nodes using a YAML configuration file that follows Kubernetes conventions. A minimal configuration just specifies the type of resource to create (Cluster) and the apiVersion to use.
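
A minimal configuration file might look like this, written with a heredoc so it can live inside a setup script. The file name kind-config.yaml is a placeholder.

```shell
# Write the minimum viable kind configuration: resource kind and apiVersion only.
cat > kind-config.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
EOF
```

Pass the file to kind create cluster --config kind-config.yaml to use it.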

Save this configuration to a file and pass it to kind create cluster with the --config flag to create a cluster from it.

You can add more nodes to your cluster by listing them in this configuration. This creates a more “realistic” Kubernetes environment, but is usually unnecessary unless you are testing specific features such as rolling updates.

Create a cluster from this configuration with kind create cluster, again passing the file through the --config flag.

Then view the three running nodes with kubectl get nodes.

Go ahead and delete this cluster with kind delete cluster before continuing.

Creating a local Docker registry

One of the challenges in using Kubernetes for local development is getting the Docker images you build during development into your Kubernetes cluster. Configuring this correctly lets Kubernetes pull any image you build locally when deploying your Pods and Services to the cluster you created with kind. There are a few ways to solve this problem, but the one I prefer is to run a local Docker registry that your Kubernetes cluster can access. This approach most closely matches a production deployment of Kubernetes, where images are pulled from a registry.

The following example creates a Docker registry called kind-registry running locally on port 5000. This script first inspects the current environment to check if we already have a local registry running, and if we do not, then we start a new registry. The registry itself is simply an instance of the registry Docker image available on Docker Hub. We use the docker run command to start the registry.

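
A sketch of the registry check described above, wrapped in a hypothetical function start_local_registry. It assumes the registry:2 image from Docker Hub and binds the registry to 127.0.0.1:5000, mirroring the approach in kind’s local-registry guide.

```shell
# Start a registry container named kind-registry on localhost:5000,
# unless a container with that name is already running.
start_local_registry() {
  local name="kind-registry" port="5000" running
  # docker inspect prints "true" for a running container and fails if none exists.
  running="$(docker inspect -f '{{.State.Running}}' "$name" 2>/dev/null || true)"
  if [ "$running" != "true" ]; then
    # Note: if a *stopped* container with this name exists, docker run fails
    # on the name conflict; remove it with docker rm first.
    docker run -d --restart=always -p "127.0.0.1:${port}:5000" --name "$name" registry:2
  fi
}
```

Binding to 127.0.0.1 keeps the unauthenticated registry off your external network interfaces.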

Now that we have a registry running, we can configure kind to pull container images from it during deployments. The configuration below runs a single-node Kubernetes cluster and adds a containerd configuration patch that lets the node pull images from the local Docker registry.
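
A sketch of local-registry.yaml, assuming the registry container is named kind-registry and listens on port 5000. The patch tells containerd to satisfy pulls for images prefixed with localhost:5000 from http://kind-registry:5000. This is the registry-mirror syntax older kind guides used; recent kind documentation describes a hosts-directory (config_path) approach instead, so check the local-registry guide for your kind version.

```shell
# Single-node cluster whose containerd maps the localhost:5000 prefix
# to the kind-registry container, reached over plain HTTP.
cat > local-registry.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:5000"]
    endpoint = ["http://kind-registry:5000"]
EOF
```

Keeping the localhost:5000 prefix means the same image name works for docker push on your machine and for pulls inside the cluster.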

Save this file as local-registry.yaml and create the cluster with kind create cluster --config local-registry.yaml.

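The last step is to connect the local registry container to the Docker network that kind creates for the cluster (named kind), so the node can reach the registry by name: docker network connect kind kind-registry.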

Deploying an application to your cluster

Now that we have kind deployed and a local registry enabled, we can test the cluster by deploying a new service. For this demonstration, I’ve created a simple Python server using Flask, and a corresponding Dockerfile to package it for deployment to our Kubernetes cluster.

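
Because the server and Dockerfile themselves are not reproduced here, the following stand-in scaffolds both with heredocs. The route, response text, and Python base image are assumptions; port 8080 matches the localhost:8080 check later in this post.

```shell
# Stand-in Flask app listening on port 8080.
cat > app.py <<'EOF'
from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello():
    return "Hello from kind!\n"


if __name__ == "__main__":
    # Listen on all interfaces so the server is reachable from outside the container.
    app.run(host="0.0.0.0", port=8080)
EOF

# Stand-in Dockerfile that installs Flask and runs the app.
cat > Dockerfile <<'EOF'
FROM python:3.12-slim
WORKDIR /app
RUN pip install --no-cache-dir flask
COPY app.py .
EXPOSE 8080
CMD ["python", "app.py"]
EOF
```

Binding to 0.0.0.0 matters here: Flask’s default of 127.0.0.1 would only accept connections from inside the container itself.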

You can build this application as a Docker image using the docker build command. Use the -t flag to tag the image with the address of the local registry we created earlier (localhost:5000).

At this point, we have a Docker image built and tagged. Next, push it to the local registry with the docker push command.

You can check that the application works by running the newly built image with docker run, publishing port 8080, and navigating to localhost:8080.
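
The build, push, and local-run steps can be sketched as two helpers. The function names and the image name hello-app are hypothetical; the localhost:5000 prefix in the tag is what makes docker push target the local registry.

```shell
# Hypothetical helper: build the image in the current directory, tag it for
# the local registry, and push it there.
build_and_push() {
  local image="localhost:5000/$1:latest"
  docker build -t "$image" .
  docker push "$image"
}

# Hypothetical helper: run the image locally, publishing container port 8080
# on localhost:8080 and removing the container when it exits.
run_locally() {
  docker run --rm -p 8080:8080 "localhost:5000/$1:latest"
}
```

For example, run build_and_push hello-app, then run_locally hello-app, and open localhost:8080 in a browser.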

Ingress into kind

Since a typical service needs to be reachable from outside the cluster, we will also run an Ingress controller to broker connections between our local environment and the Kubernetes cluster. We do this by adding a few extra directives to our cluster configuration and then deploying the NGINX Ingress controller.

We start by creating a kind cluster with extraPortMappings and node-labels directives.

- extraPortMappings allows localhost to make requests to the Ingress controller over ports 80 and 443. This is similar to Docker’s -p flag.

- node-labels labels the node so that the Ingress controller runs only on nodes matching its label selector.
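
A sketch of kind-ingress.yaml following the pattern in kind’s Ingress guide: the control-plane node gets the ingress-ready=true label, and host ports 80 and 443 are mapped into it.

```shell
# Single control-plane node labelled ingress-ready=true, with host ports
# 80 and 443 forwarded into the node container.
cat > kind-ingress.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
EOF
```

To pull images from the local registry in this same cluster, also copy the containerdConfigPatches entry from local-registry.yaml into this file, and connect the registry to the kind network again after creating it.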

Save this YAML file as kind-ingress.yaml and create the cluster with kind create cluster --config kind-ingress.yaml.

Our cluster can now support an Ingress controller, so we can deploy one of the available options. In this tutorial I use the NGINX Ingress controller (ingress-nginx), whose GitHub repository provides a deployment manifest for kind that we can apply with kubectl apply -f.
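
kubectl apply returns before the controller is actually running, so it is worth waiting for it before creating Ingress resources. A sketch, assuming the ingress-nginx manifest’s namespace (ingress-nginx) and controller pod label (app.kubernetes.io/component=controller); the function name is hypothetical.

```shell
# Block until the ingress-nginx controller pod reports Ready, for up to 90 seconds.
wait_for_ingress() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=90s
}
```

kubectl wait exits non-zero on timeout, so a setup script can treat a slow or failed controller start as an error.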

Deploying our service

Finally we get to the good part: deploying our service into our local Kubernetes cluster! For this, we follow the typical Kubernetes pattern of creating a Service and an Ingress. The manifest references the Docker image for our service that we pushed to our local Docker registry.
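
A minimal sketch of service.yaml. It assumes the hypothetical image localhost:5000/hello-app:latest serving on port 8080, and adds a Deployment to run the Pods that the Service and Ingress route to. The ingressClassName nginx matches the IngressClass that ingress-nginx installs.

```shell
cat > service.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-app
  template:
    metadata:
      labels:
        app: hello-app
    spec:
      containers:
      - name: hello-app
        image: localhost:5000/hello-app:latest  # pulled via the registry mirror
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: hello-app
spec:
  selector:
    app: hello-app
  ports:
  - port: 80          # port the Ingress targets
    targetPort: 8080  # port the Flask app listens on
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-app
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-app
            port:
              number: 80
EOF
```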

Save this YAML file as service.yaml and deploy it to the cluster with kubectl apply -f service.yaml.

Then verify with curl that requests to localhost reach our server through the Ingress controller.

Putting Everything Together

This post has covered a lot of ground. Thankfully, each of these steps can be automated so we don’t have to start from scratch each time. Combine them into a single Bash script and you can create a Kubernetes cluster with Ingress any time, for local development, QA, or continuous integration!
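
The steps in this post can be combined into one function. This is a sketch rather than the original script: it reuses this post’s names (kind-registry, port 5000, ports 80 and 443), assumes containerd’s registry-mirror syntax, and expects a hypothetical INGRESS_MANIFEST variable pointing at the ingress-nginx manifest for kind instead of hard-coding a URL.

```shell
# Sketch of a repeatable setup: local registry, kind cluster with Ingress
# ports and a registry mirror, then the ingress-nginx controller.
setup_kind_env() {
  local reg_name="kind-registry" reg_port="5000"

  # 1. Start the local registry unless a container with that name is running.
  if [ "$(docker inspect -f '{{.State.Running}}' "$reg_name" 2>/dev/null || true)" != "true" ]; then
    docker run -d --restart=always -p "127.0.0.1:${reg_port}:5000" --name "$reg_name" registry:2
  fi

  # 2. Create a single-node cluster with the ingress-ready label, host ports
  #    80/443, and a containerd mirror for the local registry.
  cat <<EOF | kind create cluster --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."localhost:${reg_port}"]
    endpoint = ["http://${reg_name}:5000"]
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
EOF

  # 3. Attach the registry to the "kind" Docker network if it is not attached yet.
  if [ "$(docker inspect -f '{{json .NetworkSettings.Networks.kind}}' "$reg_name")" = "null" ]; then
    docker network connect kind "$reg_name"
  fi

  # 4. Install the Ingress controller and wait until it is ready.
  kubectl apply -f "${INGRESS_MANIFEST:?set INGRESS_MANIFEST to the ingress-nginx manifest for kind}"
  kubectl wait --namespace ingress-nginx --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller --timeout=90s
}
```

Source the file and run INGRESS_MANIFEST=<manifest> setup_kind_env. Running kind delete cluster tears the cluster down again, while the registry container keeps running for the next cluster.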
