Exploring the Power of Elasticsearch: A Comprehensive Guide

Introduction:

In today's data-driven world, the ability to efficiently store, search, and analyze large volumes of information has become paramount. One technology that has gained significant popularity in this realm is Elasticsearch. Built on top of the Apache Lucene search engine library, Elasticsearch is an open-source, distributed search and analytics engine known for its scalability, speed, and ease of use. In this article, we will delve into the importance of Elasticsearch, explore its real-time use cases, and provide a step-by-step guide to implementing it.

The Importance of Elasticsearch:

Full-Text Search: Elasticsearch's advanced search capabilities enable lightning-fast full-text search across large datasets. Its relevance-based scoring system ensures accurate and precise results.

Distributed Architecture: Elasticsearch's distributed nature allows it to scale horizontally, providing high availability and fault tolerance. It supports sharding and replication, ensuring data reliability and seamless scalability.

Real-time Data Analysis: Elasticsearch excels at real-time analytics, enabling businesses to gain actionable insights from their data as it is ingested. It facilitates aggregations, filtering, and complex querying, empowering organizations to make data-driven decisions quickly.

Log and Event Data Analysis: With its integration with the Elastic Stack (formerly ELK Stack), Elasticsearch becomes a central component for log analysis and monitoring. It efficiently processes logs and events, providing valuable insights into system performance, security, and troubleshooting.

Recommendation Systems: Elasticsearch's powerful querying capabilities make it ideal for building recommendation systems. By leveraging collaborative filtering and content-based filtering techniques, Elasticsearch can suggest personalized recommendations based on user preferences and behavior.

Real-Time Use Cases for Elasticsearch:

E-commerce Search: Elasticsearch powers the search functionality in numerous e-commerce platforms. It enables users to search for products with blazing-fast response times, robust filtering options, and relevance-based results.

Logging and Monitoring: Elasticsearch, when combined with Logstash and Kibana, forms the Elastic Stack. This stack is widely used for log analysis, monitoring, and centralized logging in large-scale environments.

Fraud Detection: Elasticsearch's ability to ingest and process large volumes of data in real-time makes it an excellent choice for fraud detection systems. It enables quick anomaly detection and pattern recognition, helping organizations identify and prevent fraudulent activities.

Content Management Systems: Elasticsearch's full-text search capabilities make it a preferred choice for content management systems. It allows users to quickly search through documents, articles, or any textual content with high accuracy and speed.

Implementing Elasticsearch - Step by Step:

Step 1: Install Elasticsearch:

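Download the Elasticsearch package for your operating system from the official Elastic downloads page.

Extract the downloaded package to a directory of your choice.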

Open a terminal or command prompt and navigate to the Elasticsearch directory.

Run the Elasticsearch server by executing the following command:

On Linux/macOS: bin/elasticsearch

Step 2: Configure Elasticsearch:

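Open the Elasticsearch configuration file located at config/elasticsearch.yml.

Customize the configuration based on your requirements. Some important settings to consider:

Node name: node.name

Network host: network.host (set to 0.0.0.0 to allow remote connections; do this only on a trusted network, since it exposes the node on all network interfaces)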

Port: http.port (default is 9200)

Save the configuration file.

Step 3: Verify Elasticsearch Installation:

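To check the installation, send an HTTP request to the node, for example by opening http://localhost:9200 in a browser. If Elasticsearch is running correctly, you should see a JSON response containing cluster and version information. Recent versions enable security by default, so HTTPS and credentials may be required.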
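The check in this step can also be scripted. The following is a minimal sketch, assuming a node that answers plain HTTP on the default port without authentication; the function names and the sample response are illustrative and not part of any Elasticsearch client library.

```python
import json
from urllib.request import urlopen


def summarize_cluster_info(body: str) -> dict:
    """Pull the node name, cluster name, and version out of the root-endpoint JSON."""
    info = json.loads(body)
    return {
        "node": info.get("name"),
        "cluster": info.get("cluster_name"),
        "version": info.get("version", {}).get("number"),
    }


def fetch_cluster_info(url: str = "http://localhost:9200") -> dict:
    """Request the root endpoint of a running node and summarize the response."""
    with urlopen(url, timeout=5) as response:
        return summarize_cluster_info(response.read().decode("utf-8"))


# Hypothetical sample of the kind of JSON a healthy node returns.
SAMPLE_RESPONSE = """
{
  "name": "node-1",
  "cluster_name": "elasticsearch",
  "version": {"number": "8.0.0"},
  "tagline": "You Know, for Search"
}
"""

if __name__ == "__main__":
    print(summarize_cluster_info(SAMPLE_RESPONSE))
```

Calling fetch_cluster_info() against a live node should return the same three fields; a connection error usually means the node is not running or is listening on a different address.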

Step 4: Install Fluentd:

On CentOS/RHEL: curl -L https://toolbelt.treasuredata.com/sh/install-redhat-td-agent4.sh | sh

Step 5: Configure Fluentd:

Modify the configuration to match the following example, which sends logs to Elasticsearch:

<source>
  @type tail
  path /path/to/your/log/file.log
  tag myapp.logs
  format none
  read_from_head true
</source>

<match myapp.logs>
  @type elasticsearch
  host localhost
  port 9200
  logstash_format true
</match>

Save the configuration file.
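With logstash_format set to true, the Elasticsearch output plugin writes events to daily indices rather than a single index. The sketch below shows how such an index name is derived, assuming the plugin's default prefix ("logstash"), default date format, and UTC-based dates; the helper name is hypothetical.

```python
from datetime import datetime, timezone


def logstash_index_name(event_time: datetime, prefix: str = "logstash") -> str:
    """Return the daily index name for an event, using the UTC date of the event."""
    utc_time = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc_time.strftime('%Y.%m.%d')}"


if __name__ == "__main__":
    sample_time = datetime(2024, 1, 31, 12, 0, tzinfo=timezone.utc)
    print(logstash_index_name(sample_time))
```

Knowing the naming scheme helps later in Kibana, where an index pattern such as logstash-* matches all of the daily indices.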

Step 6: Start Fluentd:

On CentOS/RHEL: sudo systemctl start td-agent

Step 7: Install Kibana:

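Download the Kibana package that matches your Elasticsearch version from the official Elastic downloads page.

Extract the downloaded package to a directory of your choice.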

Open the Kibana configuration file located at config/kibana.yml.

Customize the configuration, if necessary.

Save the configuration file.

Step 8: Start Kibana:

On Linux/macOS: bin/kibana

Step 9: Access Kibana Web Interface:

Open http://localhost:5601 (the default Kibana port) in a web browser. Kibana's web interface should appear, allowing you to configure visualizations, dashboards, and search indices.

Congratulations! You have successfully installed and configured Elasticsearch and the EFK stack (Elasticsearch, Fluentd, Kibana). You can now start sending logs to Fluentd, which will index them into Elasticsearch. Kibana provides a user-friendly interface to explore and visualize your log data.

Conclusion:

Real-time DevOps Interview Questions and Answers

Q: What is the role of a DevOps engineer in an organization?

A: A DevOps engineer is responsible for implementing and managing the tools, processes, and infrastructure required to support the continuous delivery and deployment of software applications. They work closely with development and operations teams to facilitate collaboration and communication and ensure that software is released quickly and reliably.

Q: What is the difference between Continuous Integration and Continuous Deployment?

A: Continuous Integration (CI) is the practice of frequently merging code changes into a shared repository and running automated tests to ensure that the changes are compatible with the existing codebase. Continuous Deployment (CD) goes a step further by automatically deploying code changes to production environments after they pass through a series of automated tests and checks.

Q: What is Infrastructure as Code (IaC)?

A: Infrastructure as Code (IaC) is a DevOps practice that involves managing and provisioning infrastructure resources using code rather than manual processes. This allows for version control, collaboration, and automation of infrastructure management tasks.

Q: How do you implement automation in a DevOps environment?

A: Automation can be implemented in a DevOps environment by using tools such as configuration management systems, continuous integration and deployment pipelines, and testing frameworks. By automating routine tasks, teams can reduce errors, increase efficiency, and focus on more strategic initiatives.

Q: What is the importance of collaboration in a DevOps environment?

A: Collaboration is essential in a DevOps environment because it helps teams to work together more efficiently and effectively. By breaking down silos and fostering communication between development, operations, and other teams, DevOps practices can lead to faster, more reliable software delivery and deployment.

Q: How do you measure the success of a DevOps implementation?

A: The success of a DevOps implementation can be measured in a variety of ways, including deployment frequency, time-to-market, mean time to recovery (MTTR), and customer satisfaction. By monitoring and analyzing key performance indicators (KPIs), teams can identify areas for improvement and optimize their DevOps processes.

Q: What are some common challenges associated with implementing DevOps?

A: Some common challenges associated with implementing DevOps include cultural resistance to change, lack of collaboration and communication between teams, legacy systems and processes, and the need for specialized skills and training. Overcoming these challenges requires a commitment to continuous improvement and a willingness to adopt new tools, processes, and ways of working.

Q: What is DevOps?

A: DevOps is a methodology that combines software development (Dev) and IT operations (Ops) to achieve faster delivery of software applications while maintaining high quality and reliability. It involves a cultural shift towards collaboration, automation, and continuous improvement.

Q: What are the benefits of DevOps?

A: The benefits of DevOps include faster time to market, increased collaboration and communication between teams, improved efficiency and quality, better security, and higher customer satisfaction.

Q: What tools have you used for automation in your previous roles?

A: I have used several tools for automation, including Jenkins, Ansible, Chef, Puppet, Docker, Kubernetes, Git, and AWS CloudFormation.

Q: How do you ensure code quality in a DevOps environment?

A: In a DevOps environment, code quality is ensured through continuous integration and testing. This involves automating the build, test, and deployment processes and running automated tests at every stage of the pipeline. Code reviews, code analysis tools, and metrics monitoring are also used to maintain code quality.

Q: How do you handle conflicts between developers and operations teams?

A: Conflicts between developers and operations teams can be resolved through open communication, collaboration, and a shared understanding of goals and priorities. Regular meetings and cross-functional training can also help bridge the gap between the two teams.

Q: Can you explain the difference between continuous delivery and continuous deployment?

A: Continuous delivery is the practice of keeping software in a releasable state by automatically building, testing, and delivering every change to a production-like environment for validation. The final release to production remains a manual decision. Continuous deployment removes that manual step and automatically deploys software changes to production once they pass all tests in the pipeline.

Q: What is your experience with cloud computing?

A: I have experience with several cloud platforms, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). I have worked on cloud infrastructure as code, containerization, and serverless computing.

Q: How do you ensure security in a DevOps environment?

A: Security in a DevOps environment can be ensured through automated security testing, vulnerability scanning, and continuous monitoring. Security should be integrated into every stage of the pipeline, and access control and encryption should be implemented at all levels of the infrastructure.

Q: Can you walk me through your experience with containerization?

A: I have experience with containerization using Docker and Kubernetes. I have worked on creating Docker images, deploying containers, and managing container orchestration using Kubernetes.

Q: How do you handle downtime or failures in a production environment?

A: In a production environment, downtime or failures can be handled through monitoring, automated alerts, and incident response plans. Post-mortems should be conducted to identify the root cause of the issue and to implement measures to prevent similar incidents in the future.

Kubernetes interview questions

1. What is Kubernetes and why is it important?

Kubernetes is an open-source platform used to automate the deployment, scaling, and management of containerized applications. It is like a traffic controller for containerized applications. It ensures that these applications are running efficiently and reliably, by managing their deployment, scaling, and updating processes.

Kubernetes is important because it makes it much easier to deploy and manage complex applications across different environments and infrastructures. By providing a consistent platform for containerized applications, Kubernetes allows developers to focus on building and improving their applications, rather than worrying about the underlying infrastructure. Additionally, Kubernetes helps organizations to achieve greater efficiency, scalability, and flexibility, which can result in significant cost savings and faster time-to-market.

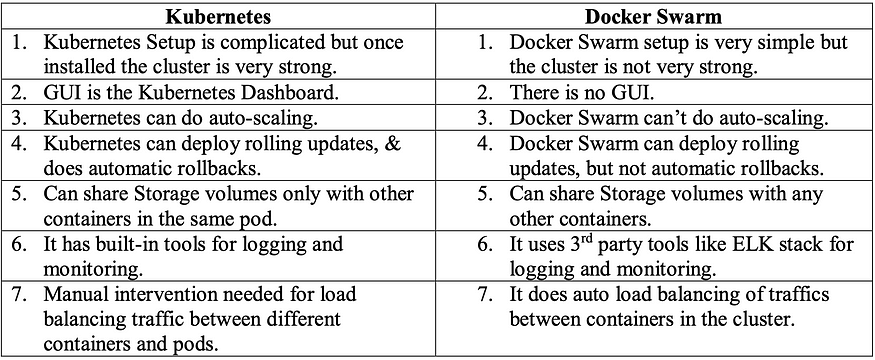

2. What is the difference between Docker Swarm and Kubernetes?

3. How does Kubernetes handle network communication between containers?

Kubernetes defines a network model in which every pod gets its own IP address, but the actual implementation relies on network plugins that follow the Container Network Interface (CNI) specification. The network plugin is responsible for allocating internet protocol (IP) addresses to pods and enabling pods to communicate with each other within the Kubernetes cluster.

When a pod is created in Kubernetes, the CNI plugin is used to create a virtual network interface for the pod. All containers in the pod share the pod’s network namespace and therefore its single IP address. This enables containers within the pod to communicate with each other via localhost, as if they were running on the same host.

To enable communication between pods, the selected network plugin connects the pod networks of all nodes, often by setting up a virtual overlay network. With such plugins, each node in the cluster runs a network agent that communicates with the agents on other nodes to establish the overlay network. This enables communication between containers running in different pods, even if they are running on different nodes.

4. How does Kubernetes handle scaling of applications?

Kubernetes provides built-in mechanisms for scaling applications horizontally and vertically, allowing you to meet changing demands for your application.

Horizontal scaling, also known as scaling out, involves adding more instances of an application to handle increased traffic. Kubernetes manages this through ReplicaSets, which ensure that a specified number of identical pods are running at all times. On its own, a ReplicaSet keeps a fixed replica count. The Horizontal Pod Autoscaler (HPA) adjusts that count automatically based on CPU or memory utilization: when utilization exceeds the configured target, the HPA increases the number of replicas, and when it falls below the target, it decreases the number of replicas.

Vertical scaling, also known as scaling up, involves increasing the resources (such as CPU or memory) available to an existing instance of an application. In Kubernetes this means raising the CPU and memory requests and limits of the pod's containers, either manually or with the Vertical Pod Autoscaler (VPA), a separate add-on that recommends or applies resource values based on observed usage.
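The replica calculation performed by the Horizontal Pod Autoscaler can be sketched in a few lines. The Kubernetes documentation describes the core rule as desired = ceil(current replicas × current metric / target metric); the function below applies that rule and clamps the result to a minimum and maximum. It is a simplified illustration that leaves out details such as the tolerance band and stabilization windows, and the function and parameter names are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Apply the basic HPA scaling rule and clamp the result to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas at 30% average CPU with a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

The ratio form explains why the autoscaler reacts proportionally: utilization at 1.5 times the target leads to roughly 1.5 times as many replicas.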

5. What is a Kubernetes Deployment and how does it differ from a ReplicaSet?

In Kubernetes, a deployment is a higher-level object that provides declarative updates for replica sets and pods. A deployment is responsible for managing the desired state of a set of pods, ensuring that the current state matches the desired state.

A deployment creates and manages replica sets, which in turn manage pods. A replica set ensures that a specified number of replicas of a pod are running at any given time. If a pod fails or is deleted, the replica set replaces it with a new pod.

In short, deployment is a higher-level object that manages the desired state of a set of pods, while a replica set is a low-level object that manages the scaling and lifecycle of pods. Deployments provide declarative updates, rolling updates, and rollbacks, while replica sets are primarily used for scaling and ensuring the desired number of replicas of a pod are running.

6. Can you explain the concept of rolling updates in Kubernetes?

In Kubernetes, a rolling update is a strategy for updating a Deployment without causing downtime or interruption to the application. It works as follows:

- First, Kubernetes creates a new ReplicaSet for the updated version of the Deployment.

- Next, Kubernetes gradually replaces the old pods with new ones, a few at a time, until all the pods have been updated. The maxSurge and maxUnavailable settings of the Deployment control how many pods are replaced at once.

- During the update process, both old and new pods are running simultaneously. This ensures that the application remains available throughout the update process.

- As new pods become ready, Kubernetes scales down the old ReplicaSet until no old pods remain.

This gradual replacement process is a way to update an application without taking it offline or causing any disruption to users. Rolling updates also provide the ability to roll back to the previous version in case something goes wrong during the update process.

Overall, rolling updates are a powerful and essential feature of Kubernetes that help keep applications up-to-date and available to users.
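To make the gradual replacement concrete, here is a small simulation of a rolling update. It is a simplified model rather than the actual controller logic: it assumes new pods become ready immediately, and it uses two limits modeled on the Deployment settings maxSurge (extra pods allowed above the desired count) and maxUnavailable (pods allowed to be missing below it).

```python
def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0) -> list:
    """Return the (old_pods, new_pods) counts after each step of a simplified rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding replicas + max_surge pods in total.
        room = replicas + max_surge - (old + new)
        new += min(room, replicas - new)
        # Scale down the old ReplicaSet while keeping at least replicas - max_unavailable pods.
        removable = (old + new) - (replicas - max_unavailable)
        old -= min(old, max(removable, 0))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old_pods, new_pods in rolling_update(3):
        print(f"old={old_pods} new={new_pods}")
```

With three replicas and the defaults used here, the total number of running pods never drops below three, which is what keeps the application available during the update.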

7. How does Kubernetes handle network security and access control?

Kubernetes has several built-in features for managing network security and access control. Some of these features are:

1. Network policies: Kubernetes allows administrators to define Network Policies that specify rules for traffic flow within the cluster. These policies can be used to restrict traffic between pods, namespaces, or external IP ranges, based on labels, IP addresses, or ports. They are enforced by the network plugin, so the cluster must use a plugin that supports them.

2. Role-Based Access Control (RBAC): Kubernetes supports RBAC, which enables administrators to define granular permissions for users and services based on their roles and responsibilities. This feature allows administrators to control access to Kubernetes resources, including pods, nodes, and services.

3. Container Network Interface (CNI): Kubernetes supports CNI, which is a plugin-based interface that allows third-party network providers to integrate with the cluster. This feature allows administrators to use their preferred networking solution to provide additional network security and access control.

8. Can you give an example of how Kubernetes can be used to deploy a highly available application?

Let’s say you have a web application that needs to be highly available, meaning it can’t go down if one or more of its components fail. You can use Kubernetes to deploy this application in a highly available manner by doing the following:

1. Create a Kubernetes cluster with multiple nodes (virtual or physical machines) that are spread across multiple availability zones or regions.

2. Create a Kubernetes Deployment for your application, which specifies how many replicas (copies) of your application should be running at any given time.

3. Create a Kubernetes Service for your application, which provides a stable IP address and DNS name for clients to access your application.

4. Use a Kubernetes Ingress to route traffic to your application’s Service, and configure the Ingress to load-balance traffic across all the replicas of your application.

By following these steps, Kubernetes will automatically monitor your application and ensure that the specified number of replicas are always running, even if one or more nodes fail. Clients will be able to access your application through the stable IP address and DNS name provided by the Service, and the Ingress will distribute traffic across all available replicas to ensure that the application remains highly available.
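The Deployment and Service from the steps above can be sketched as manifests built in code. The example below constructs them as Python dictionaries and prints JSON, which kubectl accepts in addition to YAML. The application name, image, and port numbers are hypothetical placeholders, and a real setup would normally add probes, resource requests, and rules for spreading pods across zones.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment that runs `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, port: int) -> dict:
    """Build a minimal Service that selects the Deployment's pods by their app label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": {"app": name}, "ports": [{"port": 80, "targetPort": port}]},
    }


if __name__ == "__main__":
    # Hypothetical application name, image, and port.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3, 8080), indent=2))
    print(json.dumps(service_manifest("web", 8080), indent=2))
```

The important detail is that the Service selector matches the pod labels from the Deployment template; that label match is how traffic reaches whichever replicas are currently running.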

9. What is a namespace in Kubernetes? Which namespace does a pod use if we don’t specify one?

Namespace can be recognised as a virtual cluster inside your Kubernetes cluster. We can have multiple namespaces inside a single Kubernetes cluster, and they are all logically isolated from each other. They can help us and our teams with organization, security, and even performance!

There are two types of Kubernetes namespaces: Kubernetes system namespaces and custom namespaces.

If we don’t specify a namespace for a pod, it is created in the default namespace. Kubernetes creates this namespace automatically when we set up a cluster, and it is used for objects that do not have a namespace specified.

Kubernetes creates four namespaces automatically:

default

kube-system

kube-public

kube-node-lease

10. How does Ingress help in Kubernetes?

Ingress is a Kubernetes resource that provides a way to manage incoming traffic to your cluster. It acts as a layer 7 (application layer) load balancer and provides advanced routing and path-based rules for HTTP and HTTPS traffic.

Here are a few ways that Ingress helps in Kubernetes:

1. Load balancing: Ingress can be used to distribute traffic to different services in the cluster, based on the URL or hostname specified in the incoming request. This helps to balance the load on the different services and ensure that the traffic is routed to the correct backend service.

2. Routing: Ingress can route traffic to different services based on the URL path or hostname specified in the request. This makes it easy to manage multiple services running on the same cluster.

3. Access control: Ingress can be used to restrict access to services based on IP addresses, HTTP headers, or other criteria. This helps to improve the security of the cluster by preventing unauthorized access to the services.

4. TLS termination: Ingress can terminate SSL/TLS encryption, allowing you to use a single certificate for multiple services and domains.
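Host-based and path-based routing can be illustrated with a short sketch. It models prefix matching on whole path elements and prefers the longest matching path, which is how the Kubernetes documentation describes precedence when several Ingress paths match. The rule format, hostnames, and service names are hypothetical, and real Ingress controllers differ in their details.

```python
def path_matches(rule_path: str, request_path: str) -> bool:
    """Prefix match on whole path elements: /api matches /api/x but not /apiary."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[:len(rule_parts)] == rule_parts


def route(rules: list, host: str, path: str):
    """Return the backend service for a request, or None when no rule matches.

    `rules` is a list of (host, path_prefix, service) tuples.
    """
    matches = [
        (prefix, service)
        for rule_host, prefix, service in rules
        if rule_host == host and path_matches(prefix, path)
    ]
    if not matches:
        return None
    # The longest matching prefix takes precedence.
    return max(matches, key=lambda match: len(match[0]))[1]


# Hypothetical routing rules for one hostname.
RULES = [
    ("shop.example.com", "/", "frontend"),
    ("shop.example.com", "/api", "api"),
    ("shop.example.com", "/api/orders", "orders"),
]

if __name__ == "__main__":
    print(route(RULES, "shop.example.com", "/api/orders/42"))
    print(route(RULES, "shop.example.com", "/apiary"))
```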

11. Can you explain the different types of services in Kubernetes?

There are four types of services in Kubernetes:

1. ClusterIP (default): A ClusterIP service provides a stable IP address for a set of pods in the cluster. This IP address is only accessible within the cluster, and it allows other services and pods to access the pods that belong to the ClusterIP service.

2. NodePort: This type of service exposes a set of pods to the outside world. A NodePort service maps a port on each node in the cluster to a specific port on the pods. This service type is used when you need to access a service from outside the cluster.

3. LoadBalancer: This type of service is used to expose a set of pods to the outside world through a load balancer. The LoadBalancer service type is used in cloud environments, and it is responsible for automatically creating a cloud load balancer and configuring it to route traffic to the pods in the service.

4. ExternalName: This type of service maps a service to an external DNS name, allowing you to use the DNS name to access the service instead of the IP address. This service type is useful when you have a service running outside of the cluster, and you want to access it from within the cluster.

12. Can you explain the concept of self-healing in Kubernetes and give examples of how it works?

Self-healing is a feature provided by the Kubernetes open-source system. If a containerized app or an application component fails or goes down, Kubernetes re-deploys it to retain the desired state. Kubernetes provides self-healing by default.

Ex: Suppose we have a web application deployed in Kubernetes with 2 replicas. Each replica runs in its own pod. When liveness probes are configured, Kubernetes monitors the health of each container by sending periodic requests to the application’s endpoints.

If one of the replicas fails, Kubernetes detects it by monitoring the responses to the health check requests. It then terminates the failed container and starts a new one to replace it, ensuring that the total number of replicas is always maintained. The replacement container is created from the same image and configuration as the original, which helps to ensure consistency across replicas.

Kubernetes also supports rolling updates, which allow you to update your application without causing downtime. When you update your application, Kubernetes creates a new set of replicas with the updated code and configuration. It then gradually replaces the old replicas with the new ones, ensuring that the application remains available during the update process.
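Self-healing comes down to a reconciliation loop that compares the desired state with the observed state and corrects the difference. The sketch below is a toy model of one reconciliation pass, not the actual controller code: failed pods are dropped and replacements are created until the desired replica count is met. The pod names and the name generator are hypothetical.

```python
from itertools import count


def reconcile(desired: int, pods: dict, fresh_names) -> dict:
    """Return the pod set after one reconciliation pass.

    `pods` maps pod name to a healthy flag. Unhealthy pods are removed and
    replacements are created until `desired` healthy pods exist.
    """
    healthy = {name: True for name, is_healthy in pods.items() if is_healthy}
    while len(healthy) < desired:
        healthy[next(fresh_names)] = True
    return healthy


if __name__ == "__main__":
    names = (f"web-{index}" for index in count(3))
    observed = {"web-1": True, "web-2": False}  # web-2 failed its health check
    print(reconcile(2, observed, names))
```

Running the same pass again on the result changes nothing, which reflects how the controller keeps converging on the desired state instead of reacting to individual events.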

13. How does Kubernetes handle storage management for containers?

Kubernetes provides various ways to manage storage for containers, including: volumes, persistent volumes, and storage classes.

1. Volumes: A volume is a directory accessible to containers in a pod. Volumes are used to store data that needs to persist beyond the lifetime of a container. Kubernetes supports several types of volumes, such as emptyDir, hostPath, configMap, secret, and more. Each type of volume has its own properties and behaviour.

2. Persistent Volumes: A persistent volume (PV) is a piece of storage in the cluster that is either statically provisioned by an administrator or dynamically provisioned through a storage class. It is not tied to any specific pod. A pod uses it by referencing a PersistentVolumeClaim (PVC) that is bound to the PV.

3. Storage Classes: A storage class is used to define the types of storage that can be dynamically provisioned in the cluster. Storage classes provide a way to abstract the underlying storage infrastructure, making it easier to manage and use storage resources in a consistent way. A storage class defines the provisioner that will be used to provision the storage, along with other parameters like the reclaim policy and volume binding mode.

14. How does the NodePort service work?

NodePort service in Kubernetes allows you to expose a container running in a Kubernetes cluster to the outside world by mapping a specific port of the Kubernetes node to the container’s port.

First, you create a NodePort service by defining it in a Kubernetes manifest file. In this manifest file, you specify the target port on which your container is listening and the port on which you want to expose the service to the outside world.

When you create the service, Kubernetes assigns a port from the range 30000–32767 (the default range) to the service, unless you request a specific port in that range yourself. This port is the “NodePort” that gives the service its name.

Kubernetes then creates a mapping between the NodePort and the target port of your container.

When you want to access your container from outside the Kubernetes cluster, you can use the IP address of any node in the cluster along with the NodePort to access the service. For example, if the NodePort assigned to your service is 32000 and you have a node with IP address 10.0.0.100, you can access the service at http://10.0.0.100:32000.

The node that you use to access the service will route the traffic to the correct pod based on the mapping that Kubernetes created between the NodePort and the target port of your container.
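As a small illustration of the addressing described above, the helper below builds the external URL for a NodePort service and rejects ports outside the default range. The function name is hypothetical, and the range check assumes the cluster has not been configured with a custom NodePort range.

```python
# Default NodePort range, 30000 to 32767 inclusive.
NODE_PORT_RANGE = range(30000, 32768)


def node_port_url(node_ip: str, node_port: int, scheme: str = "http") -> str:
    """Build the URL for reaching a NodePort service through any node of the cluster."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range 30000-32767")
    return f"{scheme}://{node_ip}:{node_port}"


if __name__ == "__main__":
    print(node_port_url("10.0.0.100", 32000))
```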

15. What is a multinode cluster and single-node cluster in Kubernetes?

In Kubernetes, a node is a worker machine that runs containerized applications. A cluster is a group of nodes that work together to run and manage these applications.

A single-node cluster in Kubernetes consists of only one node, which means that all the applications and services are running on the same node. This configuration is useful for testing and development purposes, but it is not recommended for production environments.

On the other hand, a multinode cluster consists of multiple nodes that work together to distribute the workload and provide high availability. In a multinode cluster, if one node fails, the applications can be automatically moved to another node, ensuring that the applications remain available.

16. What is the difference between create and apply in Kubernetes?

“create” and “apply” are two different kubectl commands used to manage Kubernetes resources.

“Create” command:

The “create” command is used to create a new Kubernetes resource from a YAML or JSON file. When you create a resource using the “create” command, Kubernetes will create a new resource object based on the specification provided in the file. If a resource with the same name already exists, the “create” command will return an error.

“Apply” command:

The “apply” command is used to create or update a Kubernetes resource based on a YAML or JSON file. When you apply a resource using the “apply” command, Kubernetes will update the existing resource object if it already exists, or create a new resource object if it doesn’t exist.

The main difference between the two commands is that “create” always creates a new resource, while “apply” can create or update an existing resource.
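The difference in behavior can be modeled with a tiny in-memory store. This is an illustrative sketch, not how the API server or kubectl is implemented: create fails when the name already exists, while apply creates or updates as needed. The real apply command merges changes into the live object, whereas this sketch simply replaces the stored spec. The class, exception, and status strings are assumptions chosen for the example.

```python
class AlreadyExists(Exception):
    """Raised when create is called for a resource name that is already stored."""


class ResourceStore:
    """Toy stand-in for a cluster's resource storage, keyed by resource name."""

    def __init__(self):
        self._objects = {}

    def create(self, name: str, spec: dict) -> str:
        # Imperative: only succeeds when the resource does not exist yet.
        if name in self._objects:
            raise AlreadyExists(name)
        self._objects[name] = dict(spec)
        return "created"

    def apply(self, name: str, spec: dict) -> str:
        # Declarative: make the stored resource match the given spec.
        if name not in self._objects:
            self._objects[name] = dict(spec)
            return "created"
        if self._objects[name] == spec:
            return "unchanged"
        self._objects[name] = dict(spec)
        return "configured"

    def get(self, name: str) -> dict:
        return self._objects[name]


if __name__ == "__main__":
    store = ResourceStore()
    print(store.create("web", {"replicas": 2}))
    print(store.apply("web", {"replicas": 3}))
    print(store.apply("web", {"replicas": 3}))
```

Because apply can be repeated safely, it fits well with manifests kept in version control, while create is better suited to one-off resources.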

💥💥250 Practice Questions For Terraform Associate Certification💥💥

The Terraform Associate certification is for Cloud Engineers specializing in operations, IT, or developers who know the basic concepts and skills associated with open source HashiCorp Terraform. Candidates will be best prepared for this exam if they have professional experience using Terraform in production, but performing the exam objectives in a personal demo environment may also be sufficient.👈👈👈

Since this exam consists of multiple-choice, multiple-answer, and fill-in-the-blank questions, we need a lot of practice before the exam. This article helps you understand, practice, and get ready for the exam. All the questions and answers are taken straight from the official documentation. These are only practice questions.

We are not going to discuss any concepts here, rather, I just want to create a bunch of practice questions for this exam based on the curriculum provided here.

- Understand infrastructure as code (IaC) concepts

- Understand Terraform’s purpose (vs other IaC)

- Understand Terraform basics

- Use the Terraform CLI (outside of core workflow)

- Interact with Terraform modules

- Navigate Terraform workflow

- Implement and maintain state

- Read, generate, and modify the configuration

- Understand Terraform Cloud and Enterprise capabilities

Understand infrastructure as code (IaC) concepts

Practice questions based on these concepts

- Explain what IaC is

- Describe the advantages of IaC patterns

1. What is Infrastructure as Code?

You write and execute the code to define, deploy, update, and destroy your infrastructure.

2. What are the benefits of IaC?

a. Automation

We can bring up the servers with one script and scale up and down based on our load with the same script.

b. Reusability of the code

We can reuse the same code.

c. Versioning

We can check it into version control and we get versioning. Now we can see an incremental history of who changed what, how is our infrastructure actually defined at any given point of time, and we have this transparency of documentation.

IaC makes changes idempotent, consistent, repeatable, and predictable.
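Idempotency is easiest to see in a small example. The sketch below is not how any particular IaC tool is implemented; it models infrastructure as a dictionary and shows that applying the same desired configuration a second time produces no further changes. The resource names and attributes are hypothetical.

```python
def apply_config(actual: dict, desired: dict) -> list:
    """Bring `actual` in line with `desired` and return the list of changes made."""
    changes = []
    for name, spec in desired.items():
        if name not in actual:
            actual[name] = spec
            changes.append(f"create {name}")
        elif actual[name] != spec:
            actual[name] = spec
            changes.append(f"update {name}")
    for name in list(actual):
        if name not in desired:
            del actual[name]
            changes.append(f"destroy {name}")
    return changes


if __name__ == "__main__":
    infrastructure = {}
    desired_state = {"web": {"size": "small"}}
    print(apply_config(infrastructure, desired_state))  # first run makes changes
    print(apply_config(infrastructure, desired_state))  # second run makes none
```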

3. How does using IaC make it easy to provision infrastructure?

IaC makes it easy to provision and apply infrastructure configurations, saving time. It standardizes workflows across different infrastructure providers (e.g., VMware, AWS, Azure, GCP, etc.) by using a common syntax across all of them.

4. What does idempotent mean in terms of IaC?

The idempotent characteristic provided by IaC tools ensures that, even if the same code is applied multiple times, the result remains the same.

5. What are Day 0 and Day 1 activities?

IaC can be applied throughout the lifecycle, both on the initial build, as well as throughout the life of the infrastructure. Commonly, these are referred to as Day 0 and Day 1 activities. “Day 0” code provisions and configures your initial infrastructure. “Day 1” refers to OS and application configurations you apply after you’ve initially built your infrastructure.

6. What are the use cases of Terraform?

Heroku App Setup

Multi-Tier Applications

Self-Service Clusters

Software Demos

Disposable Environments

Software Defined Networking

Resource Schedulers

Multi-Cloud Deployment

https://www.terraform.io/intro/use-cases.html

7. What are the advantages of Terraform?

Platform Agnostic

State Management

Operator Confidence

https://learn.hashicorp.com/terraform/getting-started/intro

8. Where do you describe all the components of your entire datacenter so that Terraform can provision them?

Configuration files that end with *.tf

9. How can Terraform build infrastructure so efficiently?

Terraform builds a graph of all your resources, and parallelizes the creation and modification of any non-dependent resources. Because of this, Terraform builds infrastructure as efficiently as possible, and operators get insight into dependencies in their infrastructure.

Understand Terraform’s purpose (vs other IaC)

Practice questions based on these concepts

- Explain multi-cloud and provider-agnostic benefits

- Explain the benefits of state

10. What is multi-cloud deployment?

Provisioning your infrastructure across multiple cloud providers to increase the fault tolerance of your applications.

11. How is multi-cloud deployment useful?

By using only a single region or cloud provider, fault tolerance is limited by the availability of that provider. Having a multi-cloud deployment allows for more graceful recovery from the loss of a region or an entire provider.

12. What is cloud-agnostic in terms of provisioning tools?

A cloud-agnostic provisioning tool allows a single configuration to be used to manage multiple providers, and even to handle cross-cloud dependencies.

13. Is Terraform cloud-agnostic?

Yes

14. What is the use of Terraform being cloud-agnostic?

It simplifies management and orchestration, helping operators build large-scale multi-cloud infrastructures.

15. What is the Terraform State?

Every time you run Terraform, it records information about what infrastructure it created in a Terraform state file. By default, when you run Terraform in the folder /some/folder, Terraform creates the file /some/folder/terraform.tfstate. This file contains a custom JSON format that records a mapping from the Terraform resources in your configuration files to the representation of those resources in the real world.

16. What is the purpose of the Terraform State?

Mapping to the Real World

Terraform requires some sort of database to map Terraform config to the real world because you can't find the same functionality in every cloud provider. You need some kind of mechanism to be cloud-agnostic.

Metadata

Terraform must also track metadata such as resource dependencies and a pointer to the provider configuration that was most recently used with the resource in situations where multiple aliased providers are present.

Performance

When running a terraform plan, Terraform must know the current state of resources in order to effectively determine the changes that it needs to make to reach your desired configuration.

For larger infrastructures, querying every resource is too slow. Many cloud providers do not provide APIs to query multiple resources at once, and the round trip time for each resource is hundreds of milliseconds. So, Terraform stores a cache of the attribute values for all resources in the state. This is the most optional feature of Terraform state and is done only as a performance improvement.

Syncing

When two or more people work on the same configuration and make changes to the infrastructure, it is very important for everyone to be working with the same state so that operations are applied to the same remote objects.

https://www.terraform.io/docs/state/purpose.html

17. What is the name of the terraform state file?

terraform.tfstate

Understand Terraform basics

Practice questions based on these concepts

- Handle Terraform and provider installation and versioning

- Describe the plug-in based architecture

- Demonstrate using multiple providers

- Describe how Terraform finds and fetches providers

- Explain when to use and not use provisioners and when to use local-exec or remote-exec

18. How do you install terraform on different OS?

// macOS
brew install terraform

// Windows
choco install terraform

https://learn.hashicorp.com/terraform/getting-started/install

19. How do you manually install terraform?

Step 1: Download the zip file and unzip it

Step 2: mv ~/Downloads/terraform /usr/local/bin/terraform

20. Where do you put terraform configurations so that you can configure some behaviors of Terraform itself?

The special terraform configuration block type is used to configure some behaviors of Terraform itself, such as requiring a minimum Terraform version to apply your configuration.

terraform {
  # ...
}

21. Only constants are allowed inside the terraform block. Is this correct?

Yes

Within a terraform block, only constant values can be used; arguments may not refer to named objects such as resources, input variables, etc., and may not use any of the Terraform language built-in functions.
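
As a minimal sketch of this rule, the block below sets required_version from a literal string, and the commented-out line shows the kind of reference that would be rejected. The variable name tf_version is hypothetical and only there for illustration.

```hcl
terraform {
  # A literal constraint string is allowed.
  required_version = ">= 0.12"

  # Not allowed: arguments in this block cannot refer to input variables.
  # required_version = var.tf_version
}
```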

22. What are the Providers?

A provider is a plugin that Terraform uses to translate the API interactions with the service. A provider is responsible for understanding API interactions and exposing resources. Because Terraform can interact with any API, you can represent almost any infrastructure type as a resource in Terraform.

https://www.terraform.io/docs/configuration/providers.html

23. How do you configure a Provider?

provider "google" {
  project = "acme-app"
  region  = "us-central1"
}

The name given in the block header ("google" in this example) is the name of the provider to configure. Terraform associates each resource type with a provider by taking the first word of the resource type name (separated by underscores), and so the "google" provider is assumed to be the provider for the resource type name google_compute_instance.

The body of the block (between { and }) contains configuration arguments for the provider itself. Most arguments in this section are specified by the provider itself; in this example both project and region are specific to the google provider.

24. What are the meta-arguments that are defined by Terraform itself and available for all provider blocks?

version: constrains the allowed provider versions

alias: allows using the same provider with different configurations for different resources

25. What is provider initialization and why do we need it?

Each time a new provider is added to configuration -- either explicitly via a provider block or by adding a resource from that provider -- Terraform must initialize the provider before it can be used. Initialization downloads and installs the provider's plugin so that it can later be executed.

26. How do you initialize any Provider?

Provider initialization is one of the actions of terraform init. Running this command will download and initialize any providers that are not already initialized.

27. When you run the terraform init command, all the providers are installed in the current working directory. Is this true?

Providers downloaded by terraform init are only installed for the current working directory; other working directories can have their own installed provider versions.

Note that terraform init cannot automatically download providers that are not distributed by HashiCorp. See Third-party Plugins below for installation instructions.

28. How do you constrain the provider version?

To constrain the provider version, add a required_providers block inside a terraform block:

terraform {
  required_providers {
    aws = "~> 1.0"
  }
}

29. How do you upgrade to the latest acceptable version of the provider?

terraform init -upgrade

This upgrades to the latest acceptable version of each provider.

This command also upgrades to the latest versions of all Terraform modules.

30. In how many ways can you configure provider versions?

1. With a required_providers block inside the terraform block

terraform {
  required_providers {
    aws = "~> 1.0"
  }
}

2. With a version argument within a provider block

provider "aws" {
  version = "1.0"
}

31. How do you configure Multiple Provider Instances?

alias

You can optionally define multiple configurations for the same provider, and select which one to use on a per-resource or per-module basis.

32. Why do we need Multiple Provider instances?

Some example scenarios:

a. multiple regions for a cloud platform

b. targeting multiple Docker hosts

c. multiple Consul hosts, etc.

33. How do we define multiple Provider configurations?

To include multiple configurations for a given provider, include multiple provider blocks with the same provider name, but set the alias meta-argument to an alias name to use for each additional configuration.

# The default provider configuration
provider "aws" {
  region = "us-east-1"
}

# Additional provider configuration for west coast region
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

34. How do you select alternate providers?

By default, resources use a default provider configuration inferred from the first word of the resource type name. For example, a resource of type aws_instance uses the default (un-aliased) aws provider configuration unless otherwise stated. To select an aliased provider configuration, set the provider meta-argument:

resource "aws_instance" "foo" {
  provider = aws.west

  # ...
}
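
Selection on a per-module basis works along similar lines. The following is a rough sketch, assuming a local module at the hypothetical path ./modules/network: the providers argument maps the module's default aws provider to the aliased configuration.

```hcl
# Default (un-aliased) configuration
provider "aws" {
  region = "us-east-1"
}

# Aliased configuration for the west coast region
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

# Hypothetical module; the name and source path are assumptions for illustration.
module "west_network" {
  source = "./modules/network"

  # Inside the module, resources using the default "aws" provider
  # will use the aws.west configuration.
  providers = {
    aws = aws.west
  }
}
```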

35. What is the location of the user plugins directory?

Windows: %APPDATA%\terraform.d\plugins

All other systems: ~/.terraform.d/plugins

36. Third-party plugins should be manually installed. Is that true?

True

37. The command terraform init cannot install third-party plugins. True or false?

True

Install third-party providers by placing their plugin executables in the user plugins directory. The user plugins directory is in one of the locations listed in question 35, depending on the host operating system.

Once a plugin is installed, terraform init can initialize it normally. You must run this command from the directory where the configuration files are located.

38. What is the naming scheme for provider plugins?

terraform-provider-<NAME>_vX.Y.Z

39. What is the CLI configuration file?

The CLI configuration file configures per-user settings for CLI behaviors, which apply across all Terraform working directories.

It is named either .terraformrc or terraform.rc.

40. Where is the location of the CLI configuration File?

On Windows, the file must be named terraform.rc and placed in the relevant user's %APPDATA% directory.

On all other systems, the file must be named .terraformrc (note the leading period) and placed directly in the home directory of the relevant user.

The location of the Terraform CLI configuration file can also be specified using the TF_CLI_CONFIG_FILE environment variable.

41. What is Provider Plugin Cache?

By default, terraform init downloads plugins into a subdirectory of the working directory so that each working directory is self-contained. As a consequence, if you have multiple configurations that use the same provider then a separate copy of its plugin will be downloaded for each configuration.

Given that provider plugins can be quite large (on the order of hundreds of megabytes), this default behavior can be inconvenient for those with slow or metered Internet connections. Therefore Terraform optionally allows the use of a local directory as a shared plugin cache, which then allows each distinct plugin binary to be downloaded only once.

42. How do you enable Provider Plugin Cache?

To enable the plugin cache, use the plugin_cache_dir setting in the CLI configuration file.

plugin_cache_dir = "$HOME/.terraform.d/plugin-cache"

Alternatively, the TF_PLUGIN_CACHE_DIR environment variable can be used to enable caching or to override an existing cache directory within a particular shell session.

43. When you use the plugin cache, the cache directory grows with different versions over time. Whose responsibility is it to clean it up?

The user's.

Terraform will never itself delete a plugin from the plugin cache once it's been placed there. Over time, as plugins are upgraded, the cache directory may grow to contain several unused versions which must be manually deleted.

44. Why do we need to initialize the directory?

When you create a new configuration, or check out an existing configuration from version control, you need to initialize the directory.

// Example
provider "aws" {
  profile = "default"
  region  = "us-east-1"
}

resource "aws_instance" "example" {
  ami           = "ami-2757f631"
  instance_type = "t2.micro"
}

Initializing a configuration directory downloads and installs providers used in the configuration, which in this case is the aws provider. Subsequent commands will use the local settings and data set up during initialization.

45. What is the command to initialize the directory?

terraform init

46. If different teams are working on the same configuration, how do you make sure files have consistent formatting?

terraform fmt

This command automatically updates configurations in the current directory for easy readability and consistency.

47. If different teams are working on the same configuration, how do you make sure files are syntactically valid and internally consistent?

terraform validate

This command checks for and reports errors within modules, attribute names, and value types. If your configuration is valid, Terraform will return a success message.

48. What is the command to create infrastructure?

terraform apply

49. What is the command to show the execution plan without applying it?

terraform plan

50. How do you inspect the current state of the applied infrastructure?

terraform show

When you applied your configuration, Terraform wrote data into a file called terraform.tfstate. This file now contains the IDs and properties of the resources Terraform created so that it can manage or destroy those resources going forward.

51. If your state file is too big and you want to list the resources from your state. What is the command?

terraform state list

https://learn.hashicorp.com/terraform/getting-started/build#manually-managing-state

52. What is plug-in based architecture?

Defining additional features as plugins to your core platform or core application. This provides extensibility, flexibility, and isolation.

53. What are Provisioners?

If you need to do some initial setup on your instances, provisioners let you upload files, run shell scripts, or install and trigger other software like configuration management tools.

54. How do you define provisioners?

resource "aws_instance" "example" {
  ami           = "ami-b374d5a5"
  instance_type = "t2.micro"

  provisioner "local-exec" {
    command = "echo hello > hello.txt"
  }
}

Provisioners are defined with a provisioner block within the resource block. Multiple provisioner blocks can be added to define multiple provisioning steps. Terraform supports multiple provisioners.

https://learn.hashicorp.com/terraform/getting-started/provision

55. What are the types of provisioners?

local-exec

remote-exec

56. What is a local-exec provisioner and when do we use it?

The local-exec provisioner executes a command locally on the machine running Terraform. We use it when we need to do something on our local machine without needing any external URL.

57. What is a remote-exec provisioner and when do we use it?

Another useful provisioner is remote-exec which invokes a script on a remote resource after it is created. This can be used to run a configuration management tool, bootstrap into a cluster, etc.

58. Are provisioners run only when the resource is created or destroyed?

Yes. Provisioners are only run when a resource is created or destroyed. Provisioners that run on destruction are called destroy provisioners. Provisioners are not a replacement for configuration management and changing the software of an already-running server, and are instead just meant as a way to bootstrap a server.

59. What do we need to use a remote-exec?

In order to use a remote-exec provisioner, you must choose an ssh or winrm connection in the form of a connection block within the resource or the provisioner.

Here is an example:

provider "aws" {
  profile = "default"
  region  = "us-west-2"
}

resource "aws_key_pair" "example" {
  key_name   = "examplekey"
  public_key = file("~/.ssh/terraform.pub")
}

resource "aws_instance" "example" {
  key_name      = aws_key_pair.example.key_name
  ami           = "ami-04590e7389a6e577c"
  instance_type = "t2.micro"

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/terraform")
    host        = self.public_ip
  }

  provisioner "remote-exec" {
    inline = [
      "sudo amazon-linux-extras enable nginx1.12",
      "sudo yum -y install nginx",
      "sudo systemctl start nginx"
    ]
  }
}

60. When does Terraform mark a resource as tainted?

If a resource is created successfully but fails during provisioning, Terraform will error and mark the resource as "tainted".

A resource that is tainted has been physically created, but can't be considered safe to use since provisioning failed.

61. You applied the infrastructure with terraform apply and you have some tainted resources. When you run an execution plan now, what happens to those tainted resources?

When you generate your next execution plan, Terraform will not attempt to restart provisioning on the same resource because it isn't guaranteed to be safe. Instead, Terraform will remove any tainted resources and create new resources, attempting to provision them again after creation.

https://learn.hashicorp.com/terraform/getting-started/provision

62. Terraform also does not automatically roll back and destroy the resource during the apply when the failure happens. Why?

Terraform also does not automatically roll back and destroy the resource during the apply when the failure happens, because that would go against the execution plan: the execution plan would've said a resource will be created, but does not say it will ever be deleted. If you create an execution plan with a tainted resource, however, the plan will clearly state that the resource will be destroyed because it is tainted.

https://learn.hashicorp.com/terraform/getting-started/provision

63. How do you manually taint a resource?

terraform taint resource.id

64. Does the taint command modify the infrastructure?

No. The terraform taint command does not modify infrastructure, but it does modify the state file in order to mark a resource as tainted. Once a resource is marked as tainted, the next plan will show that the resource will be destroyed and recreated and the next apply will implement this change.

65. By default, provisioners that fail will also cause the Terraform apply itself to fail. Is this true?

True

66. By default, provisioners that fail will also cause the Terraform apply itself to fail. How do you change this?

The on_failure setting can be used to change this. The allowed values are:

continue: Ignore the error and continue with creation or destruction.

fail: Raise an error and stop applying (the default behavior). If this is a creation provisioner, taint the resource.

// Example
resource "aws_instance" "web" {
  # ...

  provisioner "local-exec" {
    command    = "echo The server's IP address is ${self.private_ip}"
    on_failure = continue
  }
}

67. How do you define destroy provisioner and give an example?

You can define a destroy provisioner by setting the when argument:

provisioner "remote-exec" {
  when = destroy

  # <...snip...>
}
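
For a slightly fuller picture, here is a sketch of a destroy provisioner inside a complete resource block. It uses local-exec so no connection block is needed, and it is written with the unquoted keyword form that Terraform 0.12 and later expect. The AMI and instance type are placeholder values, not a recommendation.

```hcl
resource "aws_instance" "web" {
  # Placeholder values for illustration only.
  ami           = "ami-2757f631"
  instance_type = "t2.micro"

  # Runs on the machine running Terraform just before the instance is destroyed.
  provisioner "local-exec" {
    when    = destroy
    command = "echo 'Destroy-time provisioner'"
  }
}
```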

68. How do you apply constraints for the provider versions?

The required_providers setting is a map specifying a version constraint for each provider required by your configuration.

terraform {
  required_providers {
    aws = ">= 2.7.0"
  }
}

69. What should you use to set both a lower and upper bound on versions for each provider?

~>

terraform {
  required_providers {
    aws = "~> 2.7.0"
  }
}
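
For comparison, here is a sketch of the same bounds written as an explicit range. With ~> only the rightmost version component may increase, so ~> 2.7.0 should be equivalent to the constraint below, and ~> 1.0 likewise corresponds to >= 1.0, < 2.0.

```hcl
terraform {
  required_providers {
    # Same bounds as "~> 2.7.0": at least 2.7.0, but below 2.8.0.
    aws = ">= 2.7.0, < 2.8.0"
  }
}
```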

70. How do you try experimental features?

In releases where experimental features are available, you can enable them on a per-module basis by setting the experiments argument inside a terraform block:

terraform {
  experiments = [example]
}

71. When does Terraform not recommend using provisioners?

Passing data into virtual machines and other compute resources

https://www.terraform.io/docs/provisioners/#passing-data-into-virtual-machines-and-other-compute-resources

Running configuration management software

https://www.terraform.io/docs/provisioners/#running-configuration-management-software

72. Expressions in provisioner blocks cannot refer to their parent resource by name. Is this true?

True

The self object represents the provisioner's parent resource, and has all of that resource's attributes. For example, use self.public_ip to reference an aws_instance's public_ip attribute.

73. What does version = "~> 1.0" mean when defining versions?

Any version greater than or equal to 1.0 and less than 2.0

74. Terraform supports both cloud and on-premises infrastructure platforms. Is this true?

True

75. Terraform assumes an empty default configuration for any provider that is not explicitly configured. A provider block can be empty. Is this true?

True

76. How do you constrain which versions of the Terraform CLI can be used with your configuration?

The required_version setting can be used to constrain which versions of the Terraform CLI can be used with your configuration. If the running version of Terraform doesn't match the constraints specified, Terraform will produce an error and exit without taking any further actions.

77. Terraform CLI versions and provider versions are independent of each other. Is this true?

True

78. You are configuring the aws provider, and it is always recommended to hard-code AWS credentials in *.tf files. Is this true?

False

HashiCorp recommends that you never hard-code credentials into *.tf configuration files. The example in question 44 explicitly defines the default AWS config profile to illustrate how Terraform should access sensitive credentials.

If you leave out your AWS credentials, Terraform will automatically search for saved API credentials (for example, in ~/.aws/credentials) or IAM instance profile credentials. This is cleaner when .tf files are checked into source control or if there is more than one admin user.
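
A minimal sketch of what this looks like in practice, assuming a profile named default exists in ~/.aws/credentials: the provider block names the profile and region but contains no keys.

```hcl
provider "aws" {
  # No access_key or secret_key arguments here. Credentials are resolved
  # outside the configuration, for example from environment variables,
  # the shared credentials file (~/.aws/credentials), or an IAM instance profile.
  profile = "default"
  region  = "us-east-1"
}
```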

79. You are provisioning the infrastructure with the command terraform apply and you noticed one of the resources failed. How do you remove that resource without affecting the whole infrastructure?

You can taint the resource, and the next apply will destroy and recreate it.

terraform taint <resource.id>

Use the Terraform CLI (outside of core workflow)

Practice questions based on these concepts

- Given a scenario: choose when to use terraform fmt to format code

- Given a scenario: choose when to use terraform taint to taint Terraform resources

- Given a scenario: choose when to use terraform import to import existing infrastructure into your Terraform state

- Given a scenario: choose when to use terraform workspace to create workspaces

- Given a scenario: choose when to use terraform state to view Terraform state

- Given a scenario: choose when to enable verbose logging and what the outcome/value is

80. What is the fmt command?

The terraform fmt command is used to rewrite Terraform configuration files to a canonical format and style. This command applies a subset of the Terraform language style conventions, along with other minor adjustments for readability.

81. What is the recommended approach after upgrading Terraform?

The canonical format may change in minor ways between Terraform versions, so after upgrading Terraform it is recommended to proactively run terraform fmt on your modules along with any other changes you are making to adopt the new version.

82. What is the command usage?

terraform fmt [options] [DIR]

83. By default, fmt scans the current directory for configuration files. Is this true?

True

By default, fmt scans the current directory for configuration files. If the dir argument is provided then it will scan that given directory instead. If dir is a single dash (-) then fmt will read from standard input (STDIN).

84. You are formatting the configuration files. What flag should you use to see the differences?

terraform fmt -diff

85. You are formatting the configuration files. What flag should you use to process the subdirectories as well?

terraform fmt -recursive

86. You are formatting configuration files in a lot of directories and you don’t want to see the list of file changes. What flag should you use?

terraform fmt -list=false

87. What is the taint command?

The terraform taint command manually marks a Terraform-managed resource as tainted, forcing it to be destroyed and recreated on the next apply.

This command will not modify infrastructure, but does modify the state file in order to mark a resource as tainted. Once a resource is marked as tainted, the next plan will show that the resource will be destroyed and recreated and the next apply will implement this change.

88. What is the command usage?

terraform taint [options] address

The address argument is the address of the resource to mark as tainted. The address is in the resource address syntax.

89. When you are tainting a resource terraform reads the default state file terraform.tfstate. What is the flag you should use to read from a different path?

terraform taint -state=path

90. Give an example of tainting a single resource.

terraform taint aws_security_group.allow_all

The resource aws_security_group.allow_all in the module root has been marked as tainted.

91. Give an example of tainting a resource within a module?

terraform taint "module.couchbase.aws_instance.cb_node[9]"

Resource instance module.couchbase.aws_instance.cb_node[9] has been marked as tainted.

92. What is the command import?

The terraform import command is used to import existing resources into Terraform.

Terraform is able to import existing infrastructure. This allows you to take resources you've created by some other means and bring them under Terraform management.

This is a great way to slowly transition infrastructure to Terraform, or to be confident that you can use Terraform in the future if it potentially doesn't support every feature you need today.

93. What is the command import usage?

terraform import [options] ADDRESS ID

94. What is the default workspace name?

default

95. What are workspaces?

Each Terraform configuration has an associated backend that defines how operations are executed and where persistent data such as the Terraform state are stored.

The persistent data stored in the backend belongs to a workspace. Initially the backend has only one workspace, called "default", and thus there is only one Terraform state associated with that configuration.

Certain backends support multiple named workspaces, allowing multiple states to be associated with a single configuration.

96. What is the command to list the workspaces?

terraform workspace list

97. What is the command to create a new workspace?

terraform workspace new <name>

98. What is the command to show the current workspace?

terraform workspace show

99. What is the command to switch the workspace?

terraform workspace select <workspace name>

100. What is the command to delete the workspace?

terraform workspace delete <workspace name>

101. Can you delete the default workspace?

No. You can never delete the default workspace.

102. You are working in different workspaces and you want to use a different number of instances based on the workspace. How do you achieve that?

resource "aws_instance" "example" {
  count = terraform.workspace == "default" ? 5 : 1

  # ... other arguments
}

103. You are working in different workspaces and you want to use tags based on the workspace. How do you achieve that?

resource "aws_instance" "example" {
  tags = {
    Name = "web - ${terraform.workspace}"
  }

  # ... other arguments
}

104. You want to create a parallel, distinct copy of a set of infrastructure in order to test a set of changes before modifying the main production infrastructure. How do you achieve that?

Workspaces

105. What is the state command?

The terraform state command is used for advanced state management. As your Terraform usage becomes more advanced, there are some cases where you may need to modify the Terraform state. Rather than modify the state directly, the terraform state commands can be used in many cases instead.

https://www.terraform.io/docs/commands/state/index.html

106. What is the command usage?

terraform state <subcommand> [options] [args]

107. You are working on Terraform files and you want to list all the resources. What command should you use?

terraform state list

108. How do you list the resources matching a given name?

terraform state list <resource name>

109. What is the command that shows the attributes of a single resource in the state file?

terraform state show 'resource name'

110. How do you debug Terraform?

Terraform has detailed logs which can be enabled by setting the TF_LOG environment variable to any value. This will cause detailed logs to appear on stderr.

You can set TF_LOG to one of the log levels TRACE, DEBUG, INFO, WARN or ERROR to change the verbosity of the logs. TRACE is the most verbose and it is the default if TF_LOG is set to something other than a log level name.

To persist logged output you can set TF_LOG_PATH in order to force the log to always be appended to a specific file when logging is enabled. Note that even when TF_LOG_PATH is set, TF_LOG must be set in order for any logging to be enabled.

https://www.terraform.io/docs/internals/debugging.html

111. If Terraform crashes, where can you find the logs?

crash.log

If Terraform ever crashes (a "panic" in the Go runtime), it saves a log file with the debug logs from the session as well as the panic message and backtrace to crash.log.

https://www.terraform.io/docs/internals/debugging.html

112. What is the first thing you should do when Terraform crashes?

Look for the panic message.

The most interesting part of a crash log is the panic message itself and the backtrace immediately following. So the first thing to do is to search the file for panic.

https://www.terraform.io/docs/internals/debugging.html

113. You are building infrastructure for different environments for example test and dev. How do you maintain separate states?

There are two primary methods to separate state between environments:

directories

workspaces

114. What is the difference between directory-separated and workspace-separated environments?

Directory-separated environments rely on duplicate Terraform code, which may be useful if your deployment needs differ, for example to test infrastructure changes in development. But they run the risk of creating drift between the environments over time.

Workspace-separated environments use the same Terraform code but have different state files, which is useful if you want your environments to stay as similar to each other as possible, for example if you are providing development infrastructure to a team that wants to simulate running in production.
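
To illustrate the workspace-separated approach, here is a rough sketch in which one configuration adapts to the selected workspace through terraform.workspace. The workspace names, instance counts, and AMI are assumptions made up for the example.

```hcl
locals {
  # Hypothetical per-workspace sizing; the workspace names are assumptions.
  instance_counts = {
    default = 1
    dev     = 1
    prod    = 3
  }
}

resource "aws_instance" "example" {
  # Fall back to a single instance for any workspace not listed above.
  count = lookup(local.instance_counts, terraform.workspace, 1)

  # Placeholder values for illustration only.
  ami           = "ami-2757f631"
  instance_type = "t2.micro"

  tags = {
    Name = "web-${terraform.workspace}"
  }
}
```

Because every workspace shares this code and only the state differs, the environments stay structurally identical, which is the trade-off described above.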

115. What is the command to pull the remote state?

terraform state pull

This command will download the state from its current location and output the raw format to stdout.

https://www.terraform.io/docs/commands/state/pull.html

116. What command is used to manually upload a local state file to remote state?

terraform state push

The terraform state push command is used to manually upload a local state file to remote state. This command also works with local state.

https://www.terraform.io/docs/commands/state/push.html

117. The command terraform taint modifies the state file and doesn’t modify the infrastructure. Is this true?

True

This command will not modify infrastructure, but does modify the state file in order to mark a resource as tainted. Once a resource is marked as tainted, the next plan will show that the resource will be destroyed and recreated and the next apply will implement this change.

118. Your team has decided to use terraform in your company and you have existing infrastructure. How do you migrate your existing resources to terraform and start using it?

You should use terraform import, describe the imported infrastructure in the Terraform files, and then follow the Terraform workflow (init, plan, apply).

119. When you are working with workspaces, how do you access the current workspace in the configuration files?

${terraform.workspace}

120. When you are using workspaces, where does Terraform save the state file for local state?

terraform.tfstate.d

For local state, Terraform stores the workspace states in a directory called terraform.tfstate.d.

121. When you are using workspaces, where does Terraform save the state file for remote state?

For remote state, the workspaces are stored directly in the configured backend.

122. How do you remove items from the Terraform state?

terraform state rm 'packet_device.worker'

The terraform state rm command is used to remove items from the Terraform state. This command can remove single resources, single instances of a resource, entire modules, and more.

https://www.terraform.io/docs/commands/state/rm.html

123. How do you move the state from one source to another?

terraform state mv 'module.app' 'module.parent.module.app'

The terraform state mv command is used to move items in a Terraform state. This command can move single resources, single instances of a resource, entire modules, and more. This command can also move items to a completely different state file, enabling efficient refactoring.

https://www.terraform.io/docs/commands/state/mv.html

124. How do you rename a resource in the terraform state file?

terraform state mv 'packet_device.worker' 'packet_device.helper'

The above example renames the packet_device resource named worker to helper.
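In Terraform v1.1 and later, a rename like this can alternatively be declared in configuration with a moved block instead of running terraform state mv by hand. This is a different mechanism from the command shown above, and the resource arguments below are assumed placeholders.

```hcl
# The resource block has been renamed from "worker" to "helper".
resource "packet_device" "helper" {
  # Existing arguments stay unchanged; the values here are placeholders.
  hostname = "example-host"
}

# Tells Terraform that the object tracked in state as packet_device.worker
# is now managed at the address packet_device.helper.
moved {
  from = packet_device.worker
  to   = packet_device.helper
}
```

With this in place, the next plan should report the move rather than a destroy and recreate.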

Interact with Terraform modules

Practice questions based on these concepts

- Contrast module source options

- Interact with module inputs and outputs

- Describe variable scope within modules/child modules

- Discover modules from the public Terraform Module Registry

- Defining module version

125. Where do you find and explore terraform Modules?

The Terraform Registry makes it simple to find and use modules. The search query will look at module name, provider, and description to match your search terms. On the results page, filters can be used to further refine search results.

126. How do you make sure that modules have stability and compatibility?

By default, only verified modules are shown in search results. Verified modules are reviewed by HashiCorp to ensure stability and compatibility. By using the filters, you can view unverified modules as well.

127. How do you download any modules?

You need to add the module to the configuration file, as shown below:

module "consul" {
  source  = "hashicorp/consul/aws"
  version = "0.1.0"
}

The terraform init command will download and cache any modules referenced by a configuration.

128. What is the syntax for referencing a registry module?

<NAMESPACE>/<NAME>/<PROVIDER>

For example:

module "consul" {
  source  = "hashicorp/consul/aws"
  version = "0.1.0"
}

129. What is the syntax for referencing a private registry module?

<HOSTNAME>/<NAMESPACE>/<NAME>/<PROVIDER>

For example:

module "vpc" {
  source  = "app.terraform.io/example_corp/vpc/aws"
  version = "0.9.3"
}

130. Terraform recommends that all modules follow semantic versioning. Is this true?

True

131. What is a Terraform Module?

A Terraform module is a set of Terraform configuration files in a single directory. Even a simple configuration consisting of a single directory with one or more .tf files is a module.

132. What do we use modules for?

* Organize configuration

* Encapsulate configuration

* Re-use configuration

* Provide consistency and ensure best practices

https://learn.hashicorp.com/terraform/modules/modules-overview

133. How do you call modules in your configuration?

Your configuration can use module blocks to call modules in other directories. When Terraform encounters a module block, it loads and processes that module's configuration files.

134. In how many ways can you load modules?

Local and remote modules

Modules can either be loaded from the local filesystem, or a remote source. Terraform supports a variety of remote sources, including the Terraform Registry, most version control systems, HTTP URLs, and Terraform Cloud or Terraform Enterprise private module registries.

135. What are the best practices for using Modules?

1. Start writing your configuration with modules in mind. Even for modestly complex Terraform configurations managed by a single person, you'll find the benefits of using modules outweigh the time it takes to use them properly.

2. Use local modules to organize and encapsulate your code. Even if you aren't using or publishing remote modules, organizing your configuration in terms of modules from the beginning will significantly reduce the burden of maintaining and updating your configuration as your infrastructure grows in complexity.

3. Use the public Terraform Registry to find useful modules. This way you can more quickly and confidently implement your configuration by relying on the work of others to implement common infrastructure scenarios.

4. Publish and share modules with your team. Most infrastructure is managed by a team of people, and modules are an important way that teams can work together to create and maintain infrastructure. As mentioned earlier, you can publish modules either publicly or privately. We will see how to do this in a future guide in this series.

https://learn.hashicorp.com/terraform/modules/modules-overview#module-best-practices

136. What are the different source types for calling modules?

Local paths

Terraform Registry

GitHub

Generic Git, Mercurial repositories

Bitbucket

HTTP URLs

S3 buckets

GCS buckets

https://www.terraform.io/docs/modules/sources.html
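To make the source types above more concrete, the sketch below shows one module block per source type. Every path, repository, bucket, and URL is a placeholder assumption chosen for illustration; none of them is expected to resolve to a real module.

```hcl
# All sources below are illustrative placeholders.

module "local" {
  source = "./modules/network" # local path (must start with ./ or ../)
}

module "registry" {
  source  = "hashicorp/consul/aws" # Terraform Registry
  version = "0.1.0"
}

module "github" {
  source = "github.com/hashicorp/example" # GitHub shorthand
}

module "git" {
  source = "git::https://example.com/vpc.git" # generic Git repository
}

module "mercurial" {
  source = "hg::http://example.com/vpc.hg" # generic Mercurial repository
}

module "bitbucket" {
  source = "bitbucket.org/hashicorp/terraform-consul-aws" # Bitbucket shorthand
}

module "http" {
  source = "https://example.com/vpc-module.zip" # HTTP URL to an archive
}

module "s3" {
  source = "s3::https://s3-eu-west-1.amazonaws.com/examplecorp-terraform-modules/vpc.zip"
}

module "gcs" {
  source = "gcs::https://www.googleapis.com/storage/v1/modules/foomodule.zip"
}
```

Only the registry source accepts a version argument; the other source types select a revision through the source string itself.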

137. What are the arguments you need for using modules in your configuration?

source and version. Only source is required; version is optional but recommended for registry modules.

Example:

module "consul" {
  source  = "hashicorp/consul/aws"
  version = "0.1.0"
}
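The version argument also accepts a constraint rather than one exact version. As a small sketch, assuming the same registry module as above, a pessimistic constraint could look like this:

```hcl
module "consul" {
  source = "hashicorp/consul/aws"

  # "~> 0.1.0" accepts any 0.1.x release (>= 0.1.0 and < 0.2.0).
  # Terraform selects the newest available version that satisfies it.
  version = "~> 0.1.0"
}
```

Because registry modules are expected to follow semantic versioning, a constraint like this picks up patch releases while avoiding versions that may contain breaking changes.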

138. How do you set input variables for the modules?

The configuration that calls a module is responsible for setting its input values, which are passed as arguments in the module block. Aside from source and version, most of the arguments to a module block will set variable values.

On the Terraform Registry page for the AWS VPC module, you will see an Inputs tab that describes all of the input variables that the module supports.

For example, the AWS VPC module defines many input variables, such as azs, cidr, and name.

139. How do you access output variables from the modules?

You can access them with a reference of the form:

module.<MODULE NAME>.<OUTPUT NAME>
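As a hedged illustration of this reference syntax, the sketch below passes a module output into another resource. It assumes the public terraform-aws-modules/vpc/aws module and its vpc_id output; the names and CIDR range are placeholders.

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "2.21.0"

  name = "example-vpc" # placeholder values
  cidr = "10.0.0.0/16"
}

resource "aws_security_group" "web" {
  name = "web" # hypothetical security group

  # module.<MODULE NAME>.<OUTPUT NAME>: reads the vpc_id output of module "vpc".
  vpc_id = module.vpc.vpc_id
}
```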

140. Where do you put output variables in the configuration?

Module outputs are usually either passed to other parts of your configuration, or defined as outputs in your root module. You will see both uses in this guide.

Inside your configuration's directory, these outputs are declared in outputs.tf.
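The original listing for outputs.tf is not reproduced here. A minimal sketch of what such a file might contain, assuming a module named vpc that exposes a public_subnets output (as the public AWS VPC module does), is:

```hcl
# outputs.tf in the root module (illustrative; the output name is an assumption)
output "vpc_public_subnets" {
  description = "IDs of the VPC's public subnets"

  # Re-exports a child module output from the root module.
  value = module.vpc.public_subnets
}
```

After terraform apply, root module outputs like this one are printed in the terminal and can be read back with terraform output.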

141. How do you pass input variables in the configuration?

You can define the variables in variables.tf in the root folder:

variable "vpc_name" {
  description = "Name of VPC"
  type        = string
  default     = "example-vpc"
}

Then you can access these variables in the configuration like this:

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "2.21.0"

  name = var.vpc_name
  cidr = var.vpc_cidr

  azs             = var.vpc_azs
  private_subnets = var.vpc_private_subnets
  public_subnets  = var.vpc_public_subnets

  enable_nat_gateway = var.vpc_enable_nat_gateway

  tags = var.vpc_tags
}
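The module block above refers to several variables whose declarations are not shown. A sketch of how the rest of variables.tf might declare them follows; the types match how the module uses each value, but every default is an assumed placeholder.

```hcl
# Illustrative declarations; all defaults are assumptions.
variable "vpc_cidr" {
  description = "CIDR block for the VPC"
  type        = string
  default     = "10.0.0.0/16"
}

variable "vpc_azs" {
  description = "Availability zones for the VPC"
  type        = list(string)
  default     = ["us-west-2a", "us-west-2b"]
}

variable "vpc_private_subnets" {
  description = "Private subnet CIDR blocks"
  type        = list(string)
  default     = ["10.0.1.0/24", "10.0.2.0/24"]
}

variable "vpc_public_subnets" {
  description = "Public subnet CIDR blocks"
  type        = list(string)
  default     = ["10.0.101.0/24", "10.0.102.0/24"]
}

variable "vpc_enable_nat_gateway" {
  description = "Whether to create NAT gateways for the private subnets"
  type        = bool
  default     = true
}

variable "vpc_tags" {
  description = "Tags applied to VPC resources"
  type        = map(string)
  default = {
    Terraform   = "true"
    Environment = "dev"
  }
}
```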

142. What is a child module?

A module that is called by another configuration is sometimes referred to as a "child module" of that configuration.

143. When you use local modules, you don’t have to run init or get every time there is a change in the local module. Why?

When installing a local module, Terraform will instead refer directly to the source directory. Because of this, Terraform will automatically notice changes to local modules without having to re-run terraform init or terraform get.

144. When you use remote modules what should you do if there is a change in the module?

When installing a remote module, Terraform will download it into the .terraform directory in your configuration's root directory.

You should run terraform init again (adding -upgrade to pull newer versions of modules that are already installed).

145. A simple configuration consisting of a single directory with one or more .tf files is a module. Is this true?

True

146. When using a new module for the first time, you must run either terraform init or terraform get to install the module. Is this true?

True

147. When installing modules, where does Terraform save them?

.terraform/modules

Example:

.terraform/modules
├── ec2_instances
│   └── terraform-aws-modules-terraform-aws-ec2-instance-ed6dcd9
├── modules.json
└── vpc
    └── terraform-aws-modules-terraform-aws-vpc-2417f60

148. What is the required argument for the module?

source

All modules require a source argument, which is a meta-argument defined by Terraform CLI. Its value is either the path to a local directory of the module's configuration files, or a remote module source that Terraform should download and use. This value must be a literal string with no template sequences; arbitrary expressions are not allowed. For more information on possible values for this argument, see Module Sources.

149. What are the other optional meta-arguments, along with source, when defining modules?

version - (Optional) A version constraint string that specifies which versions of the referenced module are acceptable. The newest version matching the constraint will be used. version is supported only for modules retrieved from module registries.

providers - (Optional) A map whose keys are provider configuration names that are expected by the child module and whose values are the corresponding provider names in the calling module. This allows provider configurations to be passed explicitly to child modules. If not specified, the child module inherits all of the default (un-aliased) provider configurations from the calling module.
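As a sketch of the providers meta-argument, the fragment below passes an aliased provider configuration to a child module. The regions, the usw2 alias, and the ./example module path are assumptions made for illustration.

```hcl
# Default (un-aliased) provider configuration.
provider "aws" {
  region = "us-west-1"
}

# Additional, aliased configuration for a second region.
provider "aws" {
  alias  = "usw2"
  region = "us-west-2"
}

module "example" {
  source = "./example" # hypothetical local module

  # Key: the provider name the child module expects.
  # Value: the provider configuration in the calling module.
  providers = {
    aws = aws.usw2
  }
}
```

Without the providers map, the child module would inherit the default us-west-1 configuration.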

Navigate Terraform workflow

Practice questions based on these concepts

- Describe Terraform workflow ( Write -> Plan -> Create )

- Initialize a Terraform working directory (terraform init)

- Validate a Terraform configuration (terraform validate)

- Generate and review an execution plan for Terraform (terraform plan)

- Execute changes to infrastructure with Terraform (terraform apply)

- Destroy Terraform managed infrastructure (terraform destroy)

150. What is the Core Terraform workflow?

The core Terraform workflow has three steps:

1. Write - Author infrastructure as code.

2. Plan - Preview changes before applying.

3. Apply - Provision reproducible infrastructure.

151. What is the workflow when you work as an Individual Practitioner?

https://www.terraform.io/guides/core-workflow.html#working-as-an-individual-practitioner

152. What is the workflow when you work as a team?

https://www.terraform.io/guides/core-workflow.html#working-as-a-team

153. What is the workflow when you work as a large organization?

https://www.terraform.io/guides/core-workflow.html#the-core-workflow-enhanced-by-terraform-cloud

154. What is the command init?

The terraform init command is used to initialize a working directory containing Terraform configuration files. This is the first command that should be run after writing a new Terraform configuration or cloning an existing one from version control. It is safe to run this command multiple times.
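As a rough illustration of what terraform init has to prepare, the sketch below contains the three things it typically resolves: a backend, a provider requirement, and a module call. The bucket name, state key, region, and version constraints are placeholder assumptions, not values from this guide.

```hcl
terraform {
  # Provider plugins that init downloads for this working directory.
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0"
    }
  }

  # Backend that init configures for storing state (placeholder values).
  backend "s3" {
    bucket = "example-terraform-state"
    key    = "network/terraform.tfstate"
    region = "us-west-2"
  }
}

# Child module that init downloads and caches.
module "consul" {
  source  = "hashicorp/consul/aws"
  version = "0.1.0"
}
```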

155. You recently joined a team and cloned Terraform configuration files from the version control system. What is the first command you should use?

terraform init

This command performs several different initialization steps in order to prepare a working directory for use.

This command is always safe to run multiple times, to bring the working directory up to date with changes in the configuration. Though subsequent runs may give errors, this command will never delete your existing configuration or state.

If no arguments are given, the configuration in the current working directory is initialized. It is recommended to run Terraform with the current working directory set to the root directory of the configuration, and omit the DIR argument.

https://www.terraform.io/docs/commands/init.html

156. What is the flag you should use to upgrade modules and plugins as part of their respective installation steps?

-upgrade

terraform init -upgrade

157. When you are doing initialization with terraform init, you want to skip backend initialization. What should you do?

terraform init -backend=false

158. When you are doing initialization with terraform init, you want to skip child module installation. What should you do?

terraform init -get=false

159. When you are doing initialization, where are all the plugins stored?

On most operating systems: ~/.terraform.d/plugins

On Windows: %APPDATA%\terraform.d\plugins

160. When you are doing initialization with terraform init, you want to skip plugin installation. What should you do?

terraform init -get-plugins=false

Skips plugin installation. Terraform will use plugins installed in the user plugins directory, and any plugins already installed for the current working directory. If the installed plugins aren't sufficient for the configuration, init fails.

161. What does the command terraform validate do?

The terraform validate command validates the configuration files in a directory, referring only to the configuration and not accessing any remote services such as remote state, provider APIs, etc.

Validate runs checks that verify whether a configuration is syntactically valid and internally consistent, regardless of any provided variables or existing state. It is thus primarily useful for general verification of reusable modules, including correctness of attribute names and value types.

https://www.terraform.io/docs/commands/validate.html
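To illustrate the kind of internal inconsistency that terraform validate is meant to catch, the sketch below contains a deliberate mistake: an output refers to a variable that was never declared. The variable and output names are hypothetical.

```hcl
variable "instance_count" {
  description = "Number of instances to create"
  type        = number
  default     = 2
}

output "doubled_count" {
  # Deliberate typo: "instance_cout" is not declared anywhere, so
  # terraform validate reports a reference to an undeclared input variable.
  value = var.instance_cout * 2
}
```

Correcting the reference to var.instance_count lets validation pass, and no remote services are contacted in either case.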

162. What does the command plan do?